Introduction: Build Agents That Deliver Business ROI

Clients don’t buy experiments; they buy outcomes. If you want to master building AI agents for client projects as a freelancer, anchor every decision in measurable business ROI. That means translating open-ended ideas into scoped workflows that consistently reduce cost, increase revenue, or accelerate operations.

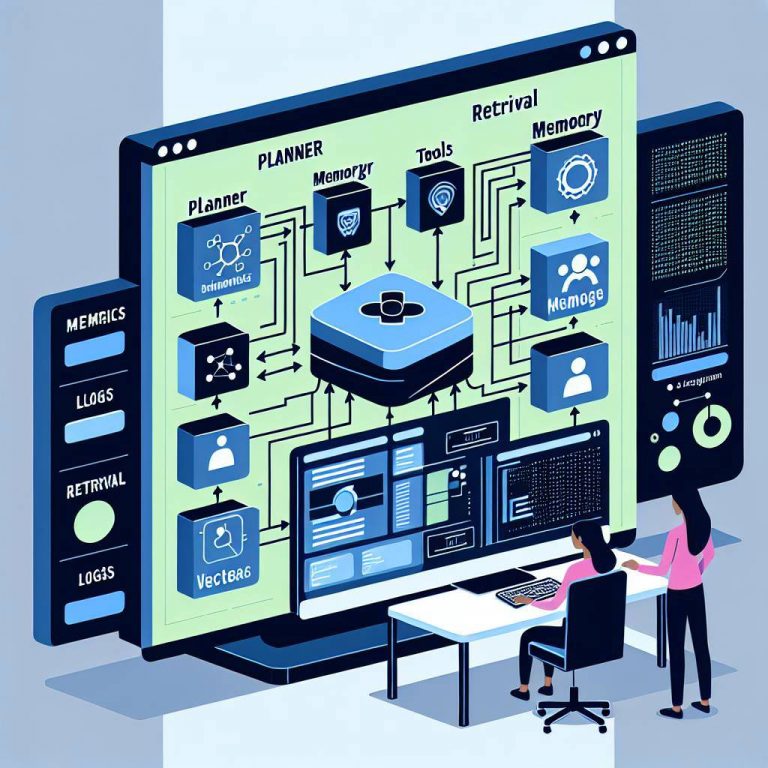

Production-grade agents are more than prompts. They require the right framework, a clear architecture, governed data access, rigorous evaluation, and a deployment plan with observability. Build with reliability in mind from day one, and you’ll ship agents clients trust.

Quick Summary: Frameworks, Architecture, Data, Testing, Deployment

- Choose a framework: Start with OpenAI Assistants for speed, or use LangChain, CrewAI, or AutoGen for complex multi-agent orchestration.

- Design architecture: Define tools, function calling, memory, and scheduling. Keep components modular so you can swap models or tools later.

- Data strategy: Implement RAG with a vector store, plus policies and safety filters for compliant access.

- Testing and evaluation: Create golden datasets, trace behavior, and set cost/latency budgets aligned to KPIs.

- Deployment and monitoring: Package as an API, bot, or UI. Add observability and feedback loops. Price with transparent usage tiers.

Pick the Right Agent Framework: OpenAI Assistants, LangChain, CrewAI, AutoGen

Framework choice affects speed to market, maintainability, and cost. Select based on your client’s security needs, tool complexity, and hosting constraints.

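- OpenAI Assistants: Excellent for rapid prototyping and hosted tool use with function calling, code interpreter, and file search. Ideal for SaaS integrations and low DevOps overhead. OpenAI has announced that the Assistants API will be deprecated in favor of the Responses API, so check its current status before committing a client build to it.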

- LangChain: Mature ecosystem for tools, RAG chains, and agents with strong observability via LangSmith. Great when you need custom pipelines and vector integrations.

- CrewAI: Multi-agent collaboration with roles and task decomposition. Useful for complex workflows like research, planning, and execution with specialized agents.

- AutoGen: Conversational multi-agent patterns and human-in-the-loop. Good for iterative problem solving, cooperative agents, and tool-augmented dialogues.

When strict data residency or self-hosting is required, lean on open-source stacks and self-managed LLMs. Otherwise, managed platforms speed delivery.

Scope the Use Case and KPIs: Objectives, Constraints, Success Metrics

Start with a short discovery sprint. Clarify what the agent does, who it serves, and how you’ll prove value. Convert ambiguous goals into measurable outcomes.

- Objectives: Automate lead qualification, triage support tickets, draft RFP responses, reconcile invoices, or enrich CRM records.

- Constraints: Budget ceilings, compliance (PII/PCI), rate limits, legacy systems, data freshness, and uptime targets.

- Success metrics: Task success rate, first-contact resolution, CSAT, cost per task, lead-to-demo conversion, latency at P95, and weekly active users.

Produce a brief scope doc with user stories, acceptance criteria, and a testable definition of done. This sets expectations and prevents scope creep.
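One way to make the definition of done testable is to encode the acceptance criteria as thresholds and check measured task results against them. The sketch below is a minimal illustration: `AcceptanceCriteria`, `definition_of_done`, the result field names, and the threshold values are all hypothetical, so substitute the KPIs agreed with the client.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceCriteria:
    # Hypothetical thresholds; agree the real numbers with the client in discovery.
    min_success_rate: float = 0.90
    max_p95_latency_s: float = 8.0
    max_cost_per_task_usd: float = 0.05


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile, using integer math for the rank."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = (len(ordered) * 95 + 99) // 100  # ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def definition_of_done(results: list[dict], criteria: AcceptanceCriteria) -> dict[str, bool]:
    """Check per-task results ('success', 'latency_s', 'cost_usd') against the criteria."""
    if not results:
        raise ValueError("need at least one task result")
    n = len(results)
    success_rate = sum(1 for r in results if r["success"]) / n
    cost_per_task = sum(r["cost_usd"] for r in results) / n
    return {
        "success_rate": success_rate >= criteria.min_success_rate,
        "p95_latency": p95([r["latency_s"] for r in results]) <= criteria.max_p95_latency_s,
        "cost_per_task": cost_per_task <= criteria.max_cost_per_task_usd,
    }
```

For example, 19 successes out of 20 tasks gives a 95% success rate. With 20 samples, the nearest-rank P95 is the 19th-fastest latency, so a single slow outlier does not fail the latency criterion on its own.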

Architecture and Tools: Tool Use, Scheduling, Memory, Function Calling

Design the minimal system that meets the KPIs. Keep each capability modular so you can swap models or tools without rewriting the agent.

- Tool use: Expose deterministic APIs (CRM, ticketing, SQL, search). Return structured JSON for reliable parsing.

- Function calling: Use native function calling with a JSON schema for every tool, then validate the model's arguments server-side before executing anything. Enforce a timeout on each call.

- Memory: Separate session memory from long-term knowledge. Store ephemeral context in a state store; persist facts via RAG.

- Scheduling: Trigger jobs via cron, webhooks, or events (new lead, ticket created). Batch where possible to control costs.
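Regardless of which framework runs the loop, it helps to validate model-proposed arguments yourself before a tool touches a real system. The sketch below uses only the Python standard library. `ToolSpec`, `call_tool`, and the `lookup_ticket` tool are hypothetical, and the simple name-to-type map is a stand-in for full JSON Schema validation (a library such as `jsonschema` is the usual choice).

```python
import json
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as ToolTimeout
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolSpec:
    func: Callable[..., dict]
    params: dict[str, type]  # required argument name -> expected Python type
    timeout_s: float = 5.0


def lookup_ticket(ticket_id: int) -> dict:
    # Hypothetical deterministic tool; a real one would call the ticketing API.
    return {"ticket_id": ticket_id, "status": "open"}


TOOLS = {"lookup_ticket": ToolSpec(lookup_ticket, {"ticket_id": int}, timeout_s=2.0)}
_POOL = ThreadPoolExecutor(max_workers=4)


def call_tool(name: str, raw_arguments: str) -> dict:
    """Validate a model-proposed tool call, then run it under a timeout.

    Every path returns a JSON-serialisable dict, so errors can be fed back
    to the model instead of crashing the agent loop.
    """
    spec = TOOLS.get(name)
    if spec is None:
        return {"error": f"unknown tool: {name}"}
    try:
        args: Any = json.loads(raw_arguments)
    except json.JSONDecodeError:
        return {"error": "arguments are not valid JSON"}
    if not isinstance(args, dict) or set(args) != set(spec.params):
        return {"error": f"expected exactly these arguments: {sorted(spec.params)}"}
    for key, expected in spec.params.items():
        value = args[key]
        # json.loads turns true/false into bool, which isinstance() also treats as int.
        if not isinstance(value, expected) or (isinstance(value, bool) and expected is not bool):
            return {"error": f"{key} must be of type {expected.__name__}"}
    future = _POOL.submit(spec.func, **args)
    try:
        return {"result": future.result(timeout=spec.timeout_s)}
    except ToolTimeout:
        # The worker thread keeps running; the agent simply stops waiting for it.
        return {"error": f"{name} timed out after {spec.timeout_s}s"}
    except Exception as exc:  # surface tool failures as data, not crashes
        return {"error": f"{name} failed: {exc!r}"}
```

Returning errors as structured data lets the model correct a bad argument on its next turn, while unknown tools and malformed payloads never reach the client's systems.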

Prefer deterministic orchestration for critical paths and agentic reasoning for research or synthesis. Log every tool call for auditing.
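A lightweight way to log every tool call is a decorator that appends one JSON line per invocation. The sketch assumes a local `tool_audit.jsonl` file and a hypothetical `create_crm_note` tool. In production you would likely ship these records to the client's log pipeline and redact sensitive arguments before writing them.

```python
import functools
import json
import time
import uuid
from typing import Callable

AUDIT_PATH = "tool_audit.jsonl"  # hypothetical location; point at your real log sink


def audited(func: Callable) -> Callable:
    """Record the tool name, arguments, outcome, and duration of every call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        record = {"id": str(uuid.uuid4()), "tool": func.__name__,
                  "args": args, "kwargs": kwargs, "ts": time.time()}
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
            record["status"] = "ok"
            return result
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise  # auditing must not swallow the failure
        finally:
            record["duration_ms"] = round((time.perf_counter() - start) * 1000, 2)
            with open(AUDIT_PATH, "a", encoding="utf-8") as fh:
                fh.write(json.dumps(record, default=str) + "\n")
    return wrapper


@audited
def create_crm_note(contact_id: str, text: str) -> dict:
    # Hypothetical tool; a real one would call the client's CRM API.
    return {"contact_id": contact_id, "note_created": True}
```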

Data Strategy and Guardrails: RAG, Vector Stores, Policies, Safety

Give agents the right context without overexposing data. Retrieval-Augmented Generation (RAG) grounds answers in retrieved source documents, which can reduce hallucinations, and lets you control which documents each user's queries can reach.

- Vector stores: Use Pinecone, Weaviate, or Chroma to store embeddings. Apply metadata filters and isolate each tenant's data (for example, with separate namespaces or collections).

- Indexing: Clean, chunk (300–800 tokens), and deduplicate. Track versions so answers map to source documents.

- Policies: Implement role-based access control, PII redaction, and least-privilege credentials. Log access for audits.

- Safety: Add toxicity filters, prompt hardening, and output validation (JSON schema). Consider Guardrails AI or custom validators.
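The indexing step can be sketched as follows. This is a minimal illustration under stated assumptions: it treats whitespace-separated words as a rough proxy for tokens (use the embedding model's tokenizer in practice), splits text into overlapping windows of 400 with 50 overlap (within the 300 to 800 range above), drops exact duplicate chunks by SHA-256 hash, and tags each chunk with its source document and version. `Chunk` and `chunk_document` are hypothetical names.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    doc_version: str  # lets an answer cite the exact document revision
    index: int
    text: str
    sha256: str


def chunk_document(doc_id: str, doc_version: str, text: str,
                   size: int = 400, overlap: int = 50,
                   seen: set[str] | None = None) -> list[Chunk]:
    """Split text into overlapping word windows, skipping exact duplicates."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    seen = set() if seen is None else seen
    words = text.split()  # crude token proxy; also normalises whitespace
    chunks: list[Chunk] = []
    step = size - overlap
    for start in range(0, len(words), step):
        piece = " ".join(words[start:start + size])
        digest = hashlib.sha256(piece.encode("utf-8")).hexdigest()
        if digest not in seen:  # exact-duplicate check across everything indexed so far
            seen.add(digest)
            chunks.append(Chunk(doc_id, doc_version, len(chunks), piece, digest))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks
```

Passing the same `seen` set across documents extends deduplication to the whole index; near-duplicate detection would need a fuzzier technique than exact hashing.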

For regulated clients, document data flows, retention windows, and cross-border transfer rules. Align with SOC 2 and GDPR where relevant.
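For the PII redaction policy, a minimal pre-processing pass might mask obvious identifiers before text reaches the model, the index, or the logs. The regular expressions below are illustrative assumptions: they only catch well-formed email addresses, 13 to 16 digit card-like numbers, and 10-digit US-style phone numbers. They are not a substitute for a dedicated PII detection service.

```python
import re

# Illustrative patterns only. Cards run before phones so that a phone pattern
# never masks part of a longer card number.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){12,15}\d\b")),
    ("PHONE", re.compile(r"\b\d{3}[ .-]?\d{3}[ .-]?\d{4}\b")),
]


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count what was masked."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

The returned counts can go into the access log, so audits show that redaction ran without the log storing the original values.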

Build, Test, and Evaluate: Golden Datasets, Tracing, Cost/Latency Budgets

Treat agents like software. Establish repeatable tests that block regressions and keep costs predictable as prompts and models evolve.

- Golden datasets: Curate 30–200 real tasks with expected outputs or scoring rubrics. Include edge cases and noisy inputs.

- Metrics: Task success, tool-call accuracy, groundedness, citation rate, P95 latency, and cost per task.

- Tracing: Use LangSmith, OpenAI's built-in tracing, OpenTelemetry, or Weights & Biases to inspect prompts, tool calls, and retries.

- Budgets: Set per-request token, latency, and spend ceilings. Fail fast or degrade gracefully when limits are hit.
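Per-request budgets can be enforced with a small guard that the agent loop charges after every model call. The sketch below is framework-agnostic. `RequestBudget`, `BudgetExceeded`, `run_steps`, the ceilings, and the per-1K-token prices are hypothetical placeholders, not any provider's real rates.

```python
import time
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised so the caller can fail fast or switch to a degraded path."""


@dataclass
class RequestBudget:
    # Hypothetical ceilings for a single agent request.
    max_tokens: int = 20_000
    max_seconds: float = 30.0
    max_usd: float = 0.10
    tokens: int = 0
    usd: float = 0.0
    started: float = field(default_factory=time.monotonic)

    def charge(self, input_tokens: int, output_tokens: int,
               usd_per_1k_in: float, usd_per_1k_out: float) -> None:
        """Record one model call and raise if any ceiling has been crossed."""
        self.tokens += input_tokens + output_tokens
        self.usd += (input_tokens * usd_per_1k_in + output_tokens * usd_per_1k_out) / 1000
        elapsed = time.monotonic() - self.started
        if self.tokens > self.max_tokens:
            raise BudgetExceeded(f"token ceiling hit ({self.tokens} tokens)")
        if self.usd > self.max_usd:
            raise BudgetExceeded(f"spend ceiling hit (${self.usd:.4f})")
        if elapsed > self.max_seconds:
            raise BudgetExceeded(f"latency ceiling hit ({elapsed:.1f}s)")


def run_steps(steps: list[tuple[int, int]], budget: RequestBudget) -> str:
    """Charge each (input_tokens, output_tokens) step; degrade instead of crashing."""
    try:
        for input_tokens, output_tokens in steps:
            # The real model or tool call would happen here.
            budget.charge(input_tokens, output_tokens,
                          usd_per_1k_in=0.001, usd_per_1k_out=0.004)  # placeholder prices
        return "completed"
    except BudgetExceeded as exc:
        return f"degraded: {exc}"
```

Because usage is charged after each call, a single call can overshoot a ceiling. Capping output tokens on each call limits how far it can overshoot.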

Automate evaluation in CI. Compare prompt, model, and retrieval variants side by side (A/B) on the golden dataset, and block promotion to production when scores regress.
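A CI gate over the golden dataset might look like the sketch below. It runs the candidate configuration on every case, scores the results, and fails if the score regresses past a tolerance from the stored baseline. The case format, keyword-based scoring, tolerance, baseline value, and the toy `candidate_agent` are all assumptions. Real rubrics often need fuzzier or model-graded scoring.

```python
from typing import Callable

# Hypothetical golden cases: an input plus keywords a correct answer must contain.
GOLDEN = [
    {"input": "Reset my password", "must_contain": ["reset link"]},
    {"input": "Cancel order 1182", "must_contain": ["order 1182", "cancelled"]},
    {"input": "asdf??", "must_contain": ["clarify"]},  # noisy-input edge case
]


def score(agent: Callable[[str], str], cases: list[dict]) -> float:
    """Fraction of cases whose output contains every required keyword."""
    passed = 0
    for case in cases:
        output = agent(case["input"]).lower()
        if all(k.lower() in output for k in case["must_contain"]):
            passed += 1
    return passed / len(cases)


def gate(candidate: float, baseline: float, tolerance: float = 0.02) -> bool:
    """Allow promotion only if the candidate is within tolerance of the baseline."""
    return candidate >= baseline - tolerance


def candidate_agent(text: str) -> str:
    # Stand-in for the real agent running the candidate prompt/model/retrieval settings.
    lowered = text.lower()
    if "password" in lowered:
        return "I've sent a reset link to your email."
    if lowered.startswith("cancel order"):
        return f"Order {text.split()[-1]} has been cancelled."
    return "Could you clarify what you need help with?"


def main(baseline: float = 0.95) -> int:
    """Return a process exit code: 0 to promote, 1 to block."""
    result = score(candidate_agent, GOLDEN)
    print(f"golden success rate: {result:.0%} (baseline {baseline:.0%})")
    return 0 if gate(result, baseline) else 1
```

A CI step can run this module and pass `main()`'s return value to `sys.exit`, so a regression fails the pipeline before anything reaches production.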

Deploy, Monitor, and Price: Observability, Feedback Loops, Packaging

Package the agent so clients can adopt it quickly and safely. Choose a deployment that matches their stack and security posture.

- Packaging: FastAPI/Express API, Slack or Teams bot, CRM plugin, or web widget. Ship Docker images and IaC for reproducibility.

- Observability: Centralize logs, traces, and metrics. Track cost per org, tool errors, and P95 latency with alerts.

- Feedback loops: Add thumbs-up/down, reason codes, and auto-ticket creation for bad outputs. Use feedback to refine prompts, fix retrieval gaps, and add failing cases to the golden dataset.

- Pricing: Charge a fixed fee for discovery, bill the build by milestone, then move to usage-based pricing (token pass-through plus margin) or per-seat pricing. Offer SLAs for premium tiers.
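The usage-based tier (token pass-through plus margin) is simple arithmetic. The sketch below shows it next to a per-seat alternative for comparison. The `Usage` type, the function names, and every price, margin, and volume are hypothetical examples, not provider rates or a recommended markup.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    output_tokens: int
    seats: int


def usage_invoice(u: Usage, usd_per_1m_in: float, usd_per_1m_out: float,
                  margin: float = 0.30, platform_fee: float = 0.0) -> float:
    """Pass model cost through with a margin, plus an optional flat platform fee."""
    model_cost = (u.input_tokens * usd_per_1m_in + u.output_tokens * usd_per_1m_out) / 1_000_000
    return round(model_cost * (1 + margin) + platform_fee, 2)


def seat_invoice(u: Usage, usd_per_seat: float) -> float:
    """Flat per-seat pricing, independent of token volume."""
    return round(u.seats * usd_per_seat, 2)
```

With placeholder prices of $1.00 per million input tokens and $4.00 per million output tokens, a month of 40M input and 8M output tokens costs $72.00 at pass-through. That becomes $93.60 with a 30% margin, or $293.60 with a $200 platform fee added. For comparison, 25 seats at $15 would come to $375.00.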

Create a runbook covering failure modes, rate limits, and model fallbacks. This reduces on-call stress and speeds incident response.
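You can encode the runbook's model-fallback policy directly. The agent first tries the primary model with a few exponential-backoff retries, then works down an ordered fallback list. The sketch below treats each model as a plain callable. `TransientError`, `call_with_fallback`, and the retry settings are assumptions; a real client wrapper would map its own rate-limit and timeout exceptions onto `TransientError`.

```python
import time
from typing import Callable, Sequence


class TransientError(Exception):
    """Rate limits, timeouts, 5xx responses: worth retrying or falling back."""


def call_with_fallback(prompt: str,
                       models: Sequence[tuple[str, Callable[[str], str]]],
                       retries: int = 2, base_delay_s: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> tuple[str, str]:
    """Return (model_name, output) from the first model that succeeds.

    Non-transient exceptions propagate immediately, since retrying a bad
    request or an auth failure on another model rarely helps.
    """
    errors: list[str] = []
    for name, model in models:
        for attempt in range(retries + 1):
            try:
                return name, model(prompt)
            except TransientError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries:
                    sleep(base_delay_s * 2 ** attempt)  # exponential backoff
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

Returning the model name alongside the output lets you log which fallback served each request, which helps when reviewing incidents.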

Conclusion: From Prototype to Production-Ready Agents

Freelancers win repeat business by shipping reliable agents that move real metrics. Tie every design choice to outcomes, and you’ll turn pilots into retained engagements.

Choose a fit-for-purpose framework, architect clean tool boundaries, secure data with RAG and policies, and enforce quality with golden datasets and tracing. Deploy with clear visibility, pricing transparency, and a plan to improve continually.

This is how to build AI agents for client projects as a freelancer—responsibly, profitably, and at production standards.

FAQ: Framework Choices, Costs, Security, and Maintenance

Q: Which framework should I start with?

A: For speed, start with OpenAI Assistants. If you need custom chains, vector workflows, or self-hosting, use LangChain. Choose CrewAI or AutoGen for multi-agent collaboration.

Q: How do I control costs?

A: Set token ceilings, cache results, batch jobs, and route non-critical steps to cheaper models. Track cost per task and alert when thresholds are exceeded.
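Caching is often the cheapest win when prompts repeat. Below is a minimal in-process sketch that keys the cache on a hash of the model name, prompt, and parameters. `CachedLLM` and its one-hour TTL are hypothetical, and a shared deployment would typically use Redis or a similar store instead of a dict.

```python
import hashlib
import json
import time
from typing import Callable


class CachedLLM:
    """Wrap a model call so identical (model, prompt, params) requests are reused.

    Only cache calls whose output you are happy to repeat, e.g. temperature 0.
    """

    def __init__(self, complete: Callable[[str, str, dict], str], ttl_s: float = 3600.0):
        self.complete = complete  # the real model call: (model, prompt, params) -> text
        self.ttl_s = ttl_s
        self._store: dict[str, tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    def _key(self, model: str, prompt: str, params: dict) -> str:
        payload = json.dumps({"m": model, "p": prompt, "k": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def __call__(self, model: str, prompt: str, **params) -> str:
        key = self._key(model, prompt, params)
        cached = self._store.get(key)
        if cached is not None and time.monotonic() - cached[0] < self.ttl_s:
            self.hits += 1
            return cached[1]
        self.misses += 1
        text = self.complete(model, prompt, params)
        self._store[key] = (time.monotonic(), text)
        return text
```

Tracking `hits` and `misses` per client makes it easy to report how much spend the cache saved.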

Q: What about security and compliance?

A: Use least-privilege API keys, encrypt data in transit and at rest, redact PII, and isolate tenants in your vector store. Document data flows for SOC 2/GDPR audits.

Q: How do I maintain and update agents?

A: Version prompts, index builds, and dependencies. Run nightly evaluations, monitor drift, and schedule quarterly optimization of prompts, tools, and retrieval settings.
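Versioning prompts can be as simple as content-addressing them, so every logged request and evaluation run records exactly which prompt produced it. The registry below is a hypothetical sketch that uses a 12-character SHA-256 prefix as the version ID. Many teams keep prompts in git or a prompt-management tool instead.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    template: str


class PromptRegistry:
    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        """Store a template; re-registering identical text returns the existing version."""
        version = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        history = self._versions.setdefault(name, [])
        for pv in history:
            if pv.version == version:
                return pv
        pv = PromptVersion(name, version, template)
        history.append(pv)
        return pv

    def latest(self, name: str) -> PromptVersion:
        return self._versions[name][-1]

    def get(self, name: str, version: str) -> PromptVersion:
        """Pin an exact version, e.g. to reproduce a failing nightly evaluation."""
        for pv in self._versions[name]:
            if pv.version == version:
                return pv
        raise KeyError(f"{name}@{version}")
```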

Q: How should I price client work?

A: Fixed-fee discovery, milestone-based build, then metered usage or per-seat with SLAs. Include a support retainer for monitoring and iterative improvements.